Faster, longer programs, and optimisation

I'll deal with general issues first, then continue towards the particular. Some of the easier things to do also result in the biggest improvements

How computers work

Memory

There's a memory hierarchy - the smallest, fastest memory is in the CPU.

Much more memory is on the disc, but it's slower

There's a memory hierarchy - the smallest, fastest memory is in the CPU.

Much more memory is on the disc, but it's slower

- CPU, registers

- L2 cache

- RAM, swap

- Disk, disk cache

Very roughly speaking, the CPU is 8* faster than the L2 cache which is 256* faster than RAM which is 80* faster than Disc.

Best case? (Doing the same operations in a different order may triple the speed of calculation).

Worst case?

CPU

Nowadays there are "dual-core" CPUs, and computers can have more than one CPU (our Linux Servers each have 4). Unless you're a low-level programmer though, you won't be able to take explicit advantage of this potential for parallel programming (unless you're a matlab user - see Matlab Parallelisation). See the Parallel Programming page if you're interested. If you're writing programs that use huge amounts of memory, it's worth knowing whether the CPU's are "32 bit" or "64 bit". The biggest memory address that a "32 bit" CPU can access is about 4G at the very best, so there's no chance of having arrays that big. The memory range of a "64 bit" CPU is far greater - the teaching system machines have 64 bit CPUs.

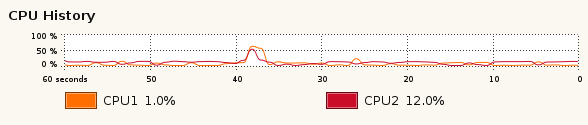

On the teaching system you can get a rough idea of a programs' CPU and memory

usage by running the gnome-system-monitor program (it's in the Applications/System Tools menu of the taskbar). Here's the CPU part of the monitor's output for a DPO machine.

On the teaching system you can get a rough idea of a programs' CPU and memory

usage by running the gnome-system-monitor program (it's in the Applications/System Tools menu of the taskbar). Here's the CPU part of the monitor's output for a DPO machine.

Especially for specialised number-crunching areas, power can be obtained fairly cheaply. Some groups in the department use big clusters of cheap machines to run programs, but unless you belong to those groups you won't be able to use these machines. Alternatively, in the Guardian (Feb 2008) an academic said he could run a simulation 130 times faster on a PlayStation 3 (which has several CPUs/accelerators) than on his desktop PC. Some CUED people use CUDA (a library that uses an nVidia board) to get 10x speed-up. There's a MATLAB plug-in for CUDA

Big Issues

Do estimates of time/space requirements as early as possible. Ask yourself

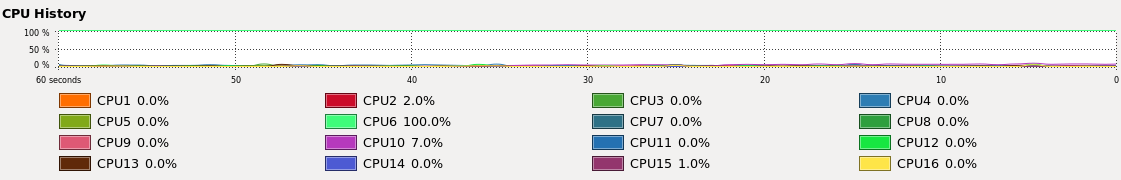

Can I find a faster machine? - On the

Teaching System there are faster machines than those in the DPO. The monitor output on the right is from one of a group of machines called ts-access with 4 quad-core CPUs.

Use "uname -a"

on a Unix machine to find out the machine type.

Can I find a faster machine? - On the

Teaching System there are faster machines than those in the DPO. The monitor output on the right is from one of a group of machines called ts-access with 4 quad-core CPUs.

Use "uname -a"

on a Unix machine to find out the machine type. - Do I need to run so many big programs? - a long time ago the university had a big central machine called phoenix that was being overworked. The heavy users were consulted to see how many more machines were required. After investigation it was discovered that many of the users were running far more than was useful.

- Have I made the most of the compiler's optimisation options? - (why not all the time? Slow, risky with bad code). Type "man g++" on linux to see what optimisation is done.

- Am I using the fastest Algorithms and most suitable data structures? - sorting, lists, etc; num of operations O(nlogn); cache-friendly?

- Is it too late to change language? - (Java? Matlab compilation?). Mixing languages? Not all of a program is going to be number-crunching - you need to get the

data in, call other programs, and display the results. The computing

service suggest python as the "glue" language. At CUED we also have

matlab, which although not free has good graphics capabilities and

thousands of functions. Both matlab and python may be fast enough to

do all but the most intensive parts of your work.

You may well use Fortran or C++ for the number crunching work - see- Techniques for Scientific C++ (Todd Veldhuizen)

- Scientific Computing: C++ versus Fortran

Identifying the bottleneck

Slow graphics? Slow disc access? Is a particular routine slow? On Unix,

typing top will give some statistics about programs that are running

(how big they are, how much CPU they're using). To get fine-grain information you

need to use a "profiler". On matlab this is easy. If your main program is

called snail, then typing the following inside matlab -

Slow graphics? Slow disc access? Is a particular routine slow? On Unix,

typing top will give some statistics about programs that are running

(how big they are, how much CPU they're using). To get fine-grain information you

need to use a "profiler". On matlab this is easy. If your main program is

called snail, then typing the following inside matlab -

profile on snail profile plot profile off

will display a histogram showing a function-by-function breakdown of performance. With fortran or C++ on Unix, you'll need to compile using the "+g" option, run your program (called snail for example), then run prof snail. You'll get output looking a little like this

%Time Seconds Cumsecs #Calls msec/call Name 32.4 0.35 0.35 __strlen20 23.1 0.25 0.60 5770000 0.00 _strlen 20.4 0.22 0.82 10000 0.02 slow 11.1 0.12 0.94 10000 0.01 fast 10.2 0.11 1.05 _mcount 1.4 0.01 1.06 _monstartup

Coding

Trade-offs - Speed/Size/Readability

- Loops - Deeply nested loops merit particular attention.

- Do nothing unnecessary in the loop.

- Keep loops small (some machines have an `instruction cache' (the DPO machines have 16k of data cache and 12k of execution cache (L1) per core) so that recent instructions can be quickly recalled. If a loop fits inside the cache, you'll benefit.

- Access arrays sequentially in memory (there's a data cache too, and paging to consider).

- as a condition compare with 0.

- `unroll' them (i.e. use a list instead of a loop)?

- 2-D arrays - row/column major (C++ is row-major)

- Passing parameters - If you're passing very large items by value, memory requirements (and hence time requirements) increase. It might be helpful to make very big data-structures global.

- Avoid recursion.

- Arithmetic - See

- Floating-Point Basics (Pete Becker)

- What Every Computer Scientist Should Know About Floating-Point Arithmetic

- Use look-up tables. sin(x)

- Use bit-shift rather than + or - rather than * or /.

- Malloc

- If you're using malloc a lot, look up the options to tune malloc.

- Reduce `paging' by keeping program small or at least stop it nonsequentially accessing memory.

- Matlab? Sparse Arrays; Vectorisation; selective rewriting as mex files

- It's important to tell the compiler as much as you can about your code so that it can optimise well. For C++, use `const' keywords whenever you can. See man pages for compiler directives. There are many - e.g. -fbranch-probabilities, -fexpensive-optimizations -ffast-math, -fno-trapping-math, -funsafe-math-optimizations, -freorder-functions, -funroll-all-loops. Using +O4 does aggressive, time-consuming optimisation.

Space

The smaller you make your data structures (and the more localised the access to them) the better. If your process (i.e. the running program) grows too big, if will run slowly and may become too big to run. Asking for more disk quota won't help. See Memory Optimisation for system-specific and language-specific details.

Running long jobs

- Running Long Programs

- When writing programs for extended use

- Use checkpoints

- Make them non-interactive

See Also

- C++: Nasty Tricks

- Basic optimising and profiling

- Faster matlab scripts

- Faster C++

- Scientific Computing

- CamGrid (a distributed computing resource based on the Condor middleware, providing a powerful computational tool for participating university members)

- An Introduction to High Performance Computing and the HPCS by Stuart Rankin